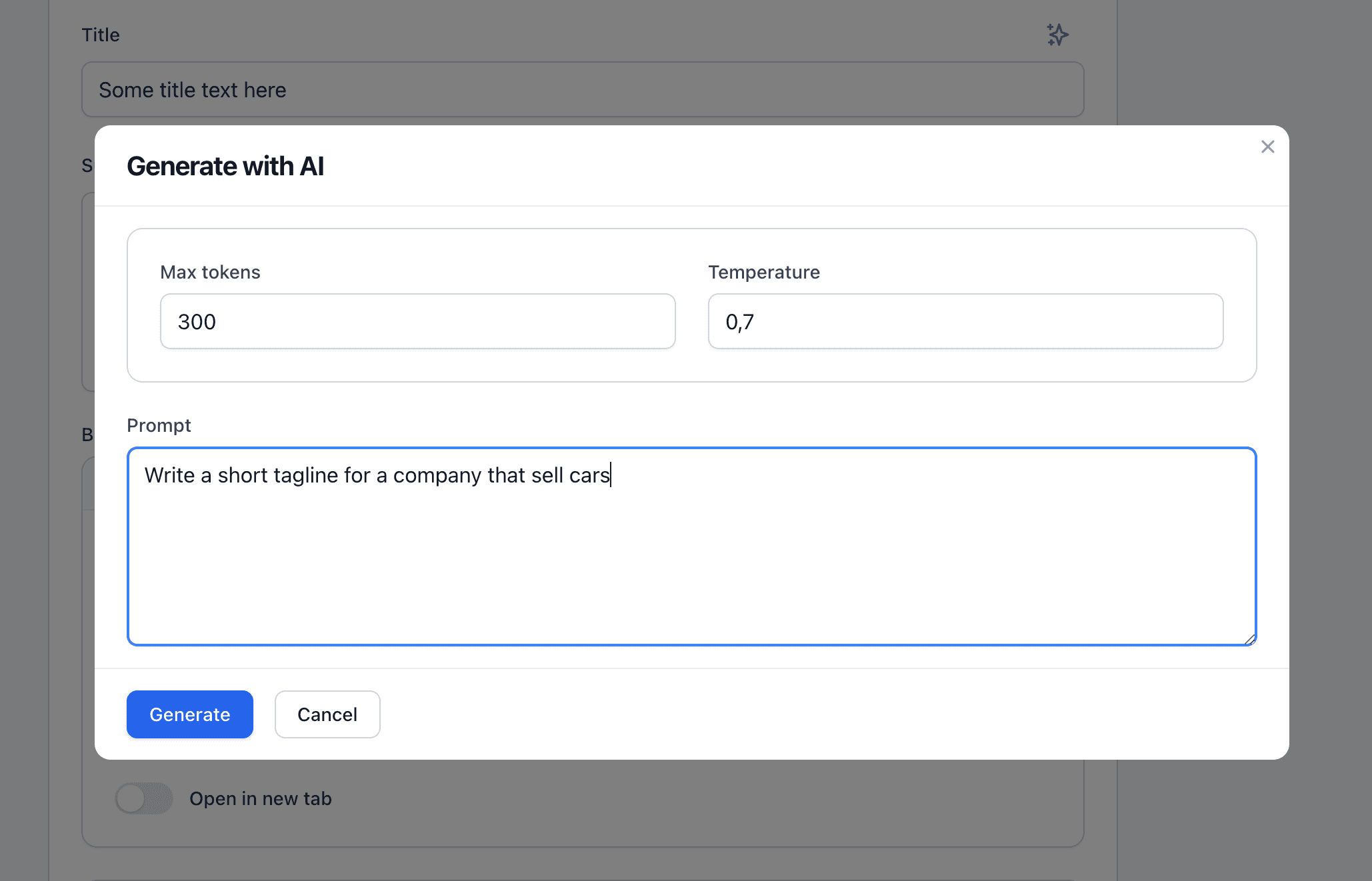

This guide shows how to add a modal with fields for `prompt`, `max_tokens` and `temperature`.

These values are passed to the OpenAI GPT-3 completions endpoint. The generated text then becomes the value of whichever form field we attach the action to.
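To make that mapping concrete, here is a minimal, framework-free sketch that turns the modal's submitted values into the parameters sent to the completions endpoint. `buildCompletionPayload()` is a hypothetical helper, not part of Filament or openai-php. Clamping `temperature` to 0..1 mirrors the form's `minValue(0)`/`maxValue(1)` used below; the OpenAI API itself accepts values up to 2.

```php
<?php

/**
 * Hypothetical helper: convert the modal's form data into the parameters
 * sent to OpenAI's completions endpoint.
 *
 * @param array{prompt?: ?string, max_tokens?: mixed, temperature?: mixed} $data
 */
function buildCompletionPayload(array $data, string $model): array
{
    // Form inputs usually arrive as strings, so cast them explicitly.
    $maxTokens = (int) ($data['max_tokens'] ?? 300);
    $temperature = (float) ($data['temperature'] ?? 0.7);

    return [
        'model' => $model,
        'prompt' => trim((string) ($data['prompt'] ?? '')),
        // Assumption: fall back to 1 token rather than sending 0 or a negative value.
        'max_tokens' => max(1, $maxTokens),
        // Mirror the form's 0..1 bounds for temperature.
        'temperature' => min(1.0, max(0.0, $temperature)),
    ];
}

$payload = buildCompletionPayload([
    'prompt' => '  Write a catchy blog title about Laravel  ',
    'max_tokens' => '300',
    'temperature' => '1.5',
], 'text-davinci-003');

print_r($payload);
```

The out-of-range temperature of `1.5` is clamped to `1.0`, and the numeric strings come back as a real `int` and `float`.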

First, install the OpenAI Laravel package by following its installation guide, which in TL;DR form is:

```bash
composer require openai-php/laravel
```

Then add the `OPENAI_API_KEY` environment variable to your `.env` file:

```env
OPENAI_API_KEY=replace-me-with-your-openai-api-key
```
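A missing key, or one still set to the placeholder, otherwise only surfaces once a request is made. Below is a minimal sketch of a fail-fast check you could run from a quick CLI script. `requireOpenAiKey()` is a hypothetical helper. It assumes the variable is visible through PHP's `getenv()`; inside Laravel you would normally read it through the package's config instead.

```php
<?php

/**
 * Hypothetical sanity check: fail early if OPENAI_API_KEY is missing, empty,
 * or still set to the placeholder value from the .env example.
 */
function requireOpenAiKey(): string
{
    $key = getenv('OPENAI_API_KEY');

    if ($key === false || trim($key) === '') {
        throw new RuntimeException('OPENAI_API_KEY is not set.');
    }

    if ($key === 'replace-me-with-your-openai-api-key') {
        throw new RuntimeException('OPENAI_API_KEY still holds the placeholder value.');
    }

    return $key;
}

// Simulate the environment for this standalone example.
putenv('OPENAI_API_KEY=replace-me-with-your-openai-api-key');

try {
    requireOpenAiKey();
} catch (RuntimeException $e) {
    echo $e->getMessage(), PHP_EOL;
}
```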

Now let's add a macro to the `Filament\Forms\Components\Field` class, registered in the `boot()` method of our service provider:

AppServiceProvider.php

```php
<?php

namespace App\Providers;

use Closure;
use Filament\Forms\Components\Actions\Action;
use Filament\Forms\Components\Card;
use Filament\Forms\Components\Field;
use Filament\Forms\Components\Textarea;
use Filament\Forms\Components\TextInput;
use Filament\Notifications\Notification;
use Illuminate\Support\ServiceProvider;
use OpenAI\Laravel\Facades\OpenAI;

class AppServiceProvider extends ServiceProvider
{
    public function boot(): void
    {
        // Adds ->withAI() to every Filament form field; $prompt pre-fills the modal.
        Field::macro('withAI', function (?string $prompt = null) {
            /** @var Field $this */
            return $this->hintAction(
                function (Closure $set, Field $component) use ($prompt) {
                    return Action::make('gpt_generate')
                        ->icon('heroicon-o-sparkles')
                        ->label('Generate with AI')
                        // Modal form: generation settings plus an editable prompt.
                        ->form([
                            Card::make([
                                TextInput::make('max_tokens')
                                    ->label('Max tokens')
                                    ->default(300)
                                    ->numeric(),
                                TextInput::make('temperature')
                                    ->numeric()
                                    ->label('Temperature')
                                    ->default(0.7)
                                    ->maxValue(1)
                                    ->minValue(0)
                                    ->step('0.1'),
                            ])->columns(2),
                            Textarea::make('prompt')
                                ->label('Prompt')
                                ->default($prompt),
                        ])
                        ->modalButton('Generate')
                        ->action(function (array $data) use ($component, $set) {
                            try {
                                $result = OpenAI::completions()->create([
                                    // Note: OpenAI has since retired text-davinci-003;
                                    // gpt-3.5-turbo-instruct replaces it on the completions endpoint.
                                    'model' => 'text-davinci-003',
                                    'prompt' => $data['prompt'],
                                    'max_tokens' => (int) $data['max_tokens'],
                                    'temperature' => (float) $data['temperature'],
                                ]);

                                // Completions often begin with blank lines, so trim them.
                                $generatedText = trim($result['choices'][0]['text']);

                                // Write the generated text into the field that owns this action.
                                $set($component->getName(), $generatedText);
                            } catch (\Throwable $exception) {
                                Notification::make()
                                    ->title('Text generation failed')
                                    ->body('Error: ' . $exception->getMessage())
                                    ->danger()
                                    ->send();
                            }
                        });
                }
            );
        });
    }
}
```
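The macro reads the answer with `$result['choices'][0]['text']`. If you want slightly more defensive handling, here is a standalone sketch. `extractCompletionText()` is a hypothetical helper that works on the response as a plain array (for example, assuming the openai-php response object's `toArray()`). It strips surrounding whitespace, fails clearly when no choice came back, and flags `finish_reason` `"length"`, which the API uses when `max_tokens` cut the text short.

```php
<?php

/**
 * Hypothetical helper: pull the generated text out of a completions response
 * array and report whether generation stopped because it hit max_tokens.
 *
 * @return array{text: string, truncated: bool}
 */
function extractCompletionText(array $response): array
{
    $choice = $response['choices'][0] ?? null;

    if ($choice === null || !isset($choice['text'])) {
        throw new UnexpectedValueException('The response contains no completion choices.');
    }

    return [
        // Completions often start with blank lines, so strip surrounding whitespace.
        'text' => trim($choice['text']),
        // finish_reason "length" means the max_tokens limit cut the text short.
        'truncated' => ($choice['finish_reason'] ?? null) === 'length',
    ];
}

// A trimmed-down response shaped like the completions API output.
$response = [
    'choices' => [
        ['text' => "\n\nTen Laravel Tips You Should Know", 'index' => 0, 'finish_reason' => 'stop'],
    ],
];

$result = extractCompletionText($response);
echo $result['text'], PHP_EOL;
```

A `truncated` result could, for instance, prompt the user to raise **Max tokens** in the modal.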

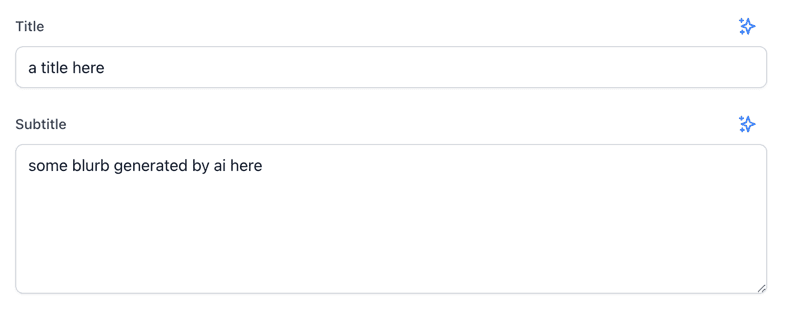

And now we can use it like this in any of our fields!

```php
TextInput::make('title')->withAI(),
```

And since the macro accepts an initial prompt, which stays editable in the modal, we can pre-fill it per field like so:

```php
Textarea::make('subtitle')
    ->withAI("Write a short compelling text intro for..."),
```
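To our understanding, `Field::macro()` is Laravel's `Macroable` trait at work. It stores the closure under a name, and when that name is called as a method, it rebinds the closure so `$this` is the field instance. That is why `$this->hintAction(...)` works inside `withAI()`. Below is a simplified sketch of that idea. `MiniMacroable` and `DemoField` are hypothetical, illustrative names, not Laravel or Filament classes.

```php
<?php

/**
 * Simplified, hypothetical re-implementation of the idea behind Laravel's
 * Macroable trait. Not the real trait.
 */
trait MiniMacroable
{
    /** @var array<string, Closure> */
    protected static array $macros = [];

    public static function macro(string $name, Closure $macro): void
    {
        static::$macros[$name] = $macro;
    }

    public function __call(string $method, array $parameters): mixed
    {
        if (!isset(static::$macros[$method])) {
            throw new BadMethodCallException("Method {$method} does not exist.");
        }

        // Rebind the stored closure so $this points at the current instance.
        $macro = static::$macros[$method]->bindTo($this, static::class);

        return $macro(...$parameters);
    }
}

// Hypothetical stand-in for a form field.
class DemoField
{
    use MiniMacroable;

    public ?string $hint = null;

    public function __construct(public string $name) {}

    public static function make(string $name): static
    {
        return new static($name);
    }
}

DemoField::macro('withAI', function (?string $prompt = null) {
    // $this is the DemoField instance the macro was called on.
    $this->hint = "Generate {$this->name} with AI" . ($prompt ? ": {$prompt}" : '');

    // Return the field so the fluent chain continues.
    return $this;
});

echo DemoField::make('title')->withAI()->hint, PHP_EOL;
```

Returning the field from the closure keeps method chaining intact, just as returning `$this->hintAction(...)` does in the real macro.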

The macro idea was inspired by Hörmann Bernhard's tip "Create a Tooltip macro".